Everything you read about the Regin (Reg In or Reagan) malware indicates that it is one of the most "sophisticated" pieces of malware since Stuxnet.

Reading this irks me, since many in our industry read "sophisticated malware" and think "I can't detect this stuff". Actually you can! You just have to look, and applying the Malware Management Framework could have discovered it.

Before taking a look at how to discover Regin, we need to discuss what sophistication means when it comes to malware, because the Information Security industry really needs to change how it discusses malware. As far as sophistication goes, malware should be broken into two components:

1. Function

2. Artifacts

The function of the malware, the application portion, is different from the artifacts or indicators that exist on the system. Sophisticated function does NOT make malware too sophisticated to discover.

Discovery and function are not synonymous when it comes to malware. As a Blue Team Defender and IR person, the first order of business is to discover malware. What the malware does application-wise is secondary and part of the malware analysis stage that occurs after the malware is discovered.

Malware is made up of files and processes. If malware exists on disk, which 95% does, it can be discovered. Now that function and artifacts have been split as far as sophistication goes, let's take a look at discovering Regin, because it is NOT so sophisticated that we could not discover it.

Regin did two clever things. The first was to use NTFS extended attributes on the Windows \fonts and \cursors directories to hide code. But the main payload was stored on disk mimicking a valid file, not hidden in any way. Regin has pieces littered across many directories, but the main payload is fairly obvious if you watch for a few indicators.

The second clever thing Regin did was to store some of the payload in the space between the end of the partition and the end of the disk, since most drives have empty blocks there that could hold a small partition, enough for malware to be stored. You would have to use disk utilities to find an odd partition area.
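To make the "space between the end of the partition and the end of the disk" idea concrete, here is a minimal Python sketch of the arithmetic a disk utility performs. It assumes you have already pulled the disk size and partition layout (in sectors) from whatever tool you use. The `Partition` type, the function names and the 2048-sector threshold are all hypothetical placeholders, not values from the Regin reports. Some trailing space is normal, so tune the threshold against your own known-good systems.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Partition:
    """Hypothetical record for one partition table entry."""
    start_sector: int   # first sector of the partition
    sector_count: int   # number of sectors in the partition


def trailing_gap_sectors(disk_sectors: int, partitions: Sequence[Partition]) -> int:
    """Return how many sectors sit unallocated after the last partition."""
    if not partitions:
        return disk_sectors
    # The last partition is the one whose end sector is furthest into the disk.
    last_end = max(p.start_sector + p.sector_count for p in partitions)
    return max(disk_sectors - last_end, 0)


def gap_is_suspicious(disk_sectors: int,
                      partitions: Sequence[Partition],
                      threshold_sectors: int = 2048) -> bool:
    """Flag the disk when the trailing gap exceeds an assumed threshold."""
    return trailing_gap_sectors(disk_sectors, partitions) > threshold_sectors
```

A flagged disk is only a lead. It tells you which systems deserve a closer look with a real disk utility, not that they are infected.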

What we are after in malware detection is an indicator we can focus on that shows a system is infected. The goal is to find one or more artifacts, not necessarily every artifact, since one artifact will lead you to more once you begin detailed analysis. The goal of malware discovery is to discover something so that you can take some action. You can do nothing (not advised), you can re-image the system, or you can find enough of the malware, what we call the head of the snake, and cut off the malware's head to clean the system enough to move on. A lot of organizations do not have the resources to do full analysis up front, and in many cases business and management just want you to clean it up and move on.

Regin drops the initial malware Stage 1 & 2 into the following directories:

• %Windir%

• %Windir%\fonts

• %Windir%\cursors (possibly only in version 2.0)

The main Stage 1 payload is a driver (.SYS):

• usbclass.sys (version 1.0)

• adpu160.sys (version 2.0)

This driver is the thing you should be able to discover. According to the reports, it is the only malware component that is visible. The driver is added to the main Windows directory, while the other components use the first clever feature and are stored in NTFS extended attributes in other directories. Discover this driver and you have found the head of the snake!
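Hunting for the head of the snake can be as simple as a filename sweep. The sketch below is one way to do it in Python, using only the driver names and directories listed above. The function names are my own, and the `C:\Windows` fallback is an assumption for systems where `%Windir%` is not set. Since the payload mimics a valid file, treat a name match as a reason to dig deeper (check signatures, hashes and timestamps), not as proof.

```python
import os
from pathlib import Path

# Stage 1 driver names taken from the list above.
STAGE1_DRIVER_NAMES = {"usbclass.sys", "adpu160.sys"}


def find_indicator_files(directories, names=STAGE1_DRIVER_NAMES):
    """Return sorted paths of files whose name matches an indicator.

    Matching is case-insensitive and does not recurse into subdirectories.
    Directories that do not exist are skipped.
    """
    wanted = {name.lower() for name in names}
    hits = []
    for directory in directories:
        root = Path(directory)
        if not root.is_dir():
            continue
        for entry in root.iterdir():
            if entry.is_file() and entry.name.lower() in wanted:
                hits.append(str(entry))
    return sorted(hits)


def default_windows_dirs():
    """Build the three drop directories listed above from %Windir%."""
    windir = os.environ.get("WINDIR", r"C:\Windows")  # fallback is an assumption
    return [windir,
            os.path.join(windir, "fonts"),
            os.path.join(windir, "cursors")]


if __name__ == "__main__":
    for path in find_indicator_files(default_windows_dirs()):
        print("Possible Regin Stage 1 driver:", path)
```

When the next report comes out, add its file names to the set and its directories to the list, and the same sweep keeps working.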

The malware can also store some of the code in the Registry. Regin used two keys for this purpose:

• HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\

• HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\RestoreList\VideoBase (possibly only in version 2.0)

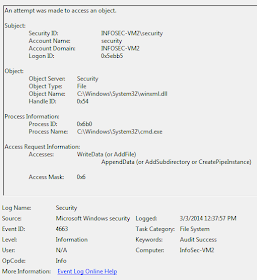

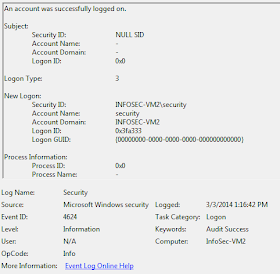

If you use auditing like I recommend for many directories and registry keys, you could have detected the additions to the Class key, as this location does not change much.

Since malware can be stored just about anywhere in the registry that the active user can access, discovery of this part of the malware is challenging. You are usually led to additional artifacts when you analyze the malware and find a string pointing you to a registry key, but auditing keys can help you discover malicious behavior. Consider using auditing on the main keys that you find in your Malware Management reviews of reports such as Regin, Cleaver and many others to help build your detection abilities. Be sure to test the audit settings, as some keys generate crazy noise and you will want to disable auditing in those locations.
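If you cannot turn on auditing right away, a baseline comparison is a rough substitute for keys that rarely change, like the Class key. To be clear, the sketch below is not Windows auditing. It is a simpler snapshot-and-diff technique swapped in for illustration, and the function names are my own. `list_subkeys` relies on Python's Windows-only `winreg` module, while `new_registry_entries` is plain set logic that runs anywhere.

```python
def list_subkeys(path):
    """Return the subkey names under HKEY_LOCAL_MACHINE\\<path> (Windows only)."""
    import winreg  # standard library, only available on Windows

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, path) as key:
        subkey_count = winreg.QueryInfoKey(key)[0]  # first item is the subkey count
        return [winreg.EnumKey(key, index) for index in range(subkey_count)]


def new_registry_entries(baseline, current):
    """Return names in `current` that are missing from `baseline`.

    Registry key names are case-insensitive, so the comparison is too.
    """
    known = {name.lower() for name in baseline}
    return sorted(name for name in set(current) if name.lower() not in known)


# Hypothetical usage on a Windows system: save a baseline once on a
# known-good build, then compare on a schedule.
#
#   CLASS_KEY = r"SYSTEM\CurrentControlSet\Control\Class"
#   baseline = list_subkeys(CLASS_KEY)      # store this somewhere safe
#   ...
#   for name in new_registry_entries(baseline, list_subkeys(CLASS_KEY)):
#       print("New subkey under Class:", name)
```

This only catches added subkeys, not changed values inside existing ones, so it complements auditing rather than replacing it.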

After the initial Stage 1 driver loads, the encrypted virtual file system is added at the end of the partition instead of using the standard disk. Stage 3 is stored in typical directories:

• %Windir%\system32

• %Windir%\system32\drivers

• HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\

This portion should be encrypted and harder to detect, but there is the registry key! So auditing is your friend here.

Hopefully this shows how even the 'most sophisticated malware' could and can be discovered if you practice the Malware Management Framework. With Regin, the head of the snake was visible and detectable, as were the registry keys it used. Read the reports, pull out the indicators, tweak your tools or scripts and begin WINNING!

Read more on the Regin malware and use Malware Management to improve your malware discovery Skillz and Information Security Posture.

http://www.symantec.com/content/en/us/enterprise/media/security_response/whitepapers/regin-analysis.pdf

https://firstlook.org/theintercept/2014/11/24/secret-regin-malware-belgacom-nsa-gchq/

https://securelist.com/files/2014/11/Kaspersky_Lab_whitepaper_Regin_platform_eng.pdf

https://www.f-secure.com/weblog/archives/00002766.html

#InfoSec #HackerHurricane